How do I compare AI visibility across different models and platforms?

Also asked as:

- How do I see performance differences between ChatGPT and Gemini?
- Can I compare brand mentions across multiple AI models?

Scrunch recommends tracking the same prompts across multiple AI platforms, reviewing the results in aggregate, and then filtering by platform to see where performance diverges.

Example

Imagine a Scrunch user wants to compare brand presence, competitive presence, position, sentiment, or citations across AI platforms.

First, they could view aggregate AI search performance data in the Home, Prompts Monitoring, or Citations tab.

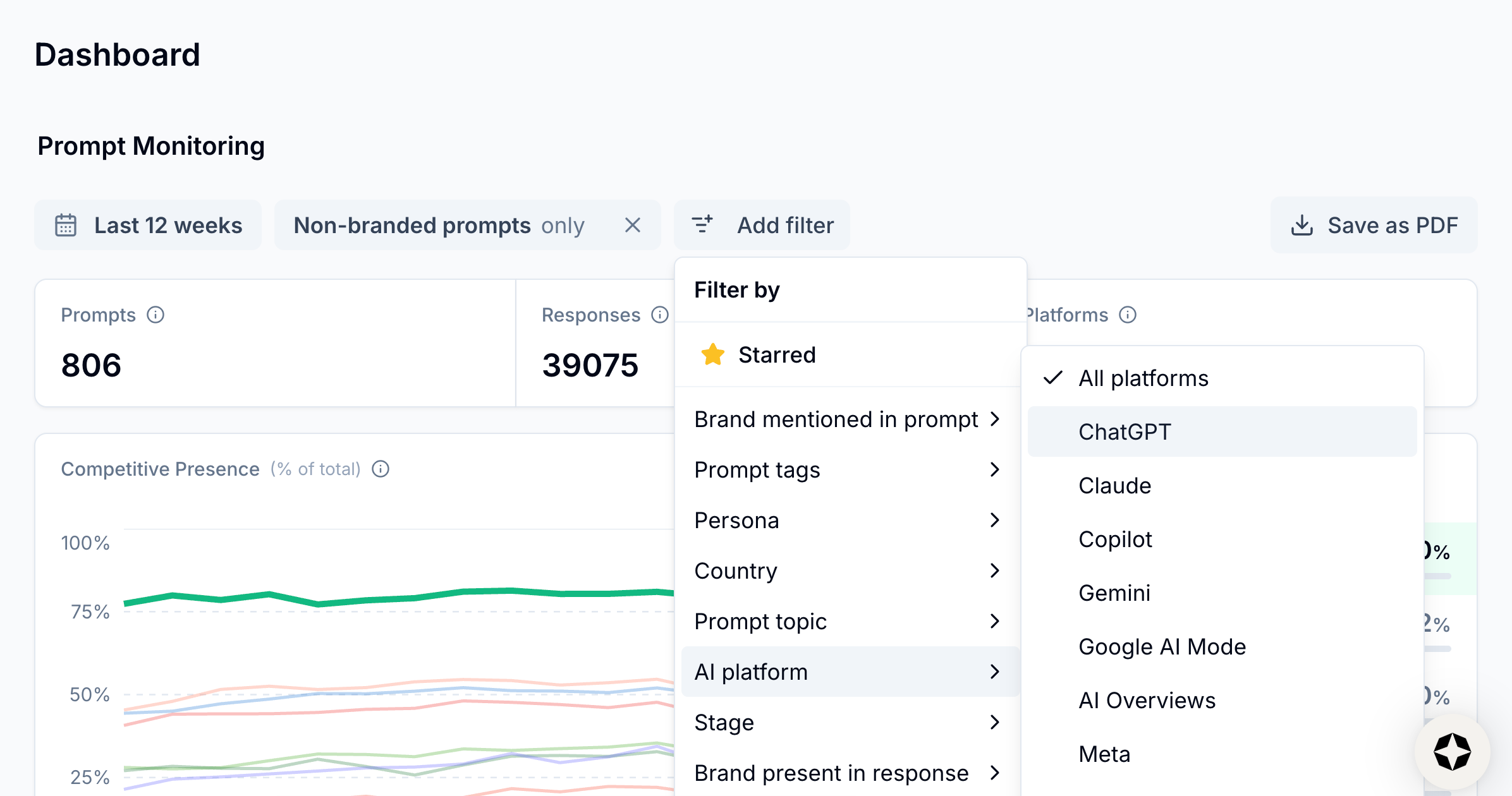

Next, they’d filter that data by AI platform (e.g., ChatGPT, Claude, Copilot, Gemini, Google AI Mode, Google AI Overviews, Meta, Perplexity).

Finally, they could compare performance on one AI platform against another, as well as against overall performance across all platforms.
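To make the comparison concrete, here is a minimal Python sketch of the same idea applied to exported results. It assumes a hypothetical export with one record per prompt and platform and a `brand_mentioned` flag; the field names, the made-up sample records, and the helper functions are illustrative only and are not part of Scrunch's product or API. It computes the brand presence rate per platform and how far each platform sits from the aggregate rate.

```python
from collections import defaultdict

# Hypothetical export: one record per (prompt, platform) response.
# Field names and values are made up for illustration, not Scrunch's schema.
results = [
    {"prompt": "best crm for startups", "platform": "ChatGPT", "brand_mentioned": True},
    {"prompt": "best crm for startups", "platform": "Gemini", "brand_mentioned": False},
    {"prompt": "crm pricing comparison", "platform": "ChatGPT", "brand_mentioned": True},
    {"prompt": "crm pricing comparison", "platform": "Gemini", "brand_mentioned": True},
    {"prompt": "top sales tools", "platform": "ChatGPT", "brand_mentioned": False},
    {"prompt": "top sales tools", "platform": "Gemini", "brand_mentioned": False},
]


def presence_rate(records):
    """Fraction of responses (0.0-1.0) that mention the brand."""
    if not records:
        return 0.0
    return sum(r["brand_mentioned"] for r in records) / len(records)


def compare_platforms(records):
    """Return {platform: (presence rate, difference from the aggregate rate)}."""
    overall = presence_rate(records)
    by_platform = defaultdict(list)
    for r in records:
        by_platform[r["platform"]].append(r)  # the "filter by platform" step
    return {
        platform: (presence_rate(rows), presence_rate(rows) - overall)
        for platform, rows in by_platform.items()
    }


overall = presence_rate(results)
for platform, (rate, delta) in compare_platforms(results).items():
    print(f"{platform}: {rate:.0%} ({delta:+.0%} vs. {overall:.0%} overall)")
```

A positive difference means the brand appears more often on that platform than across all platforms combined; a negative one points to where performance diverges downward.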

Follow-up question: What should I do if my brand performs differently across AI platforms?

Scrunch recommends users prioritize optimization for AI platforms where they underperform but have high AI bot activity on their website. Users should focus on 1-2 high-priority topics where they should appear but aren't consistently featured, then use prompt-level analysis to identify content gaps.
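A minimal sketch of that prioritization rule, assuming you already have a brand presence rate and an AI bot visit count for each platform. The numbers, the `prioritize` helper, and the `min_bot_visits` threshold are all hypothetical, and "underperform" is approximated here as falling below the unweighted average presence rate across platforms.

```python
# Hypothetical per-platform metrics; every number is made up for illustration.
# "bot_visits" stands in for AI bot activity on your website.
platforms = {
    "ChatGPT":    {"presence": 0.62, "bot_visits": 1200},
    "Perplexity": {"presence": 0.35, "bot_visits": 900},
    "Gemini":     {"presence": 0.30, "bot_visits": 150},
    "Claude":     {"presence": 0.55, "bot_visits": 400},
}


def prioritize(metrics, min_bot_visits=500):
    """Return platforms that are below the average presence rate but have
    high bot activity, ordered by largest presence gap first.

    min_bot_visits is an arbitrary, hypothetical cutoff for "high" activity.
    """
    avg = sum(m["presence"] for m in metrics.values()) / len(metrics)
    candidates = [
        (name, avg - m["presence"])  # (platform, how far below average)
        for name, m in metrics.items()
        if m["presence"] < avg and m["bot_visits"] >= min_bot_visits
    ]
    return [name for name, _gap in sorted(candidates, key=lambda c: c[1], reverse=True)]


print(prioritize(platforms))
```

With these made-up numbers, Gemini has the lowest presence but little bot activity, so the rule surfaces Perplexity first.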

If one platform consistently underperforms, audit technical access to ensure its AI bots can crawl critical webpages. If models can’t access content, optimization efforts won’t work.
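One quick technical check is whether robots.txt blocks AI crawlers from critical pages. The sketch below uses Python's standard `urllib.robotparser` module on an example robots.txt. The user-agent tokens (`GPTBot`, `ClaudeBot`, `PerplexityBot`) are ones those providers have published, but names can change, so confirm them against each provider's documentation; the robots.txt rules and URLs are placeholders. A robots.txt check covers only one part of technical access.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt; replace with your site's actual file contents.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /pricing/

User-agent: *
Allow: /
"""

# AI crawler user-agent tokens (assumed current; verify with each provider).
AI_BOTS = ["GPTBot", "ClaudeBot", "PerplexityBot"]


def audit(robots_txt, urls, bots=AI_BOTS):
    """Return {(bot, url): allowed} for every bot/URL pair."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network call
    return {(bot, url): parser.can_fetch(bot, url) for bot in bots for url in urls}


critical_pages = ["https://example.com/pricing/", "https://example.com/docs/"]
for (bot, url), allowed in audit(ROBOTS_TXT, critical_pages).items():
    if not allowed:
        print(f"{bot} is blocked from {url}")
```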

Related FAQs

What benchmarks or baselines are useful when evaluating AI search performance?

Scrunch recommends tracking brand presence, citations, referral traffic, AI agent traffic, and share of voice versus competitors as key performance indicators.
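Share of voice can be defined in several ways. One common definition, assumed here rather than taken from Scrunch's documentation, is your brand's mentions divided by all mentions of your brand and tracked competitors across the same prompts. The `share_of_voice` helper, brand names, and counts below are made up for illustration.

```python
def share_of_voice(mentions, brand):
    """Brand mentions as a fraction of all tracked-brand mentions.

    `mentions` maps brand name -> mention count across the same prompt set.
    Returns 0.0 when there are no mentions at all.
    """
    total = sum(mentions.values())
    return mentions.get(brand, 0) / total if total else 0.0


# Made-up counts for illustration only.
counts = {"YourBrand": 30, "CompetitorA": 45, "CompetitorB": 25}
print(f"{share_of_voice(counts, 'YourBrand'):.0%}")  # 30%
```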

How can I see if my visibility in AI search is improving or declining over time?

Scrunch recommends monitoring AI search trend data, such as brand mentions and citations, consistently over 2-3 week periods to distinguish real trends from one-off changes.
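A minimal sketch of comparing one period with the previous one, assuming you have daily brand-mention or citation counts, oldest first. The `compare_windows` helper and its 14-day default (the low end of the 2-3 week range) are hypothetical; averaging over a full window keeps a single unusual day from dominating the comparison.

```python
from statistics import mean


def compare_windows(daily_counts, window_days=14):
    """Compare the most recent window of daily counts with the window before it.

    Returns (previous_avg, recent_avg, relative_change), or None when there
    is not enough data for two full windows. relative_change is None if the
    previous average is zero.
    """
    if len(daily_counts) < 2 * window_days:
        return None
    recent = daily_counts[-window_days:]
    previous = daily_counts[-2 * window_days:-window_days]
    prev_avg, recent_avg = mean(previous), mean(recent)
    change = (recent_avg - prev_avg) / prev_avg if prev_avg else None
    return prev_avg, recent_avg, change


# Made-up data: two weeks at 10 mentions/day, then two weeks at 12.
print(compare_windows([10] * 14 + [12] * 14))
```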

How many prompts should I track for AI search?

Scrunch recommends estimating prompt tracking needs with the following formula: X [# of core topics] × Y [5-8 questions related to each topic] = Z [# of AI search prompts to track]. The primary goal is to get a representative sample of data across all customer journey stages through a mix of branded and non-branded prompts.
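The formula is simple arithmetic: a team with 10 core topics would track between 10 × 5 = 50 and 10 × 8 = 80 prompts. The hypothetical helper below does the same calculation for any topic count, using the 5-8 range as its default.

```python
def estimate_prompts(core_topics, questions_per_topic=(5, 8)):
    """Return the (low, high) prompt count: X core topics x Y questions per topic."""
    low, high = questions_per_topic
    return core_topics * low, core_topics * high


print(estimate_prompts(10))  # (50, 80)
```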